As the world continues its shift away from fossil fuels and other non-renewable energy sources, demand for better and longer-lasting lithium-ion batteries is growing rapidly. However, higher battery capacity comes with higher risk, as shown by the growing number of reports of battery fires and explosions linked to thermal runaway. Testing is therefore fundamental to producing reliable batteries. We have previously compared different testing methodologies, including environmental chambers and isothermal calorimetry. In this blog post, we explore adiabatic calorimetry, a powerful method for studying the internal behavior of batteries during failure scenarios.

Why Is Adiabatic Calorimetry Ideal for Battery Safety Testing?

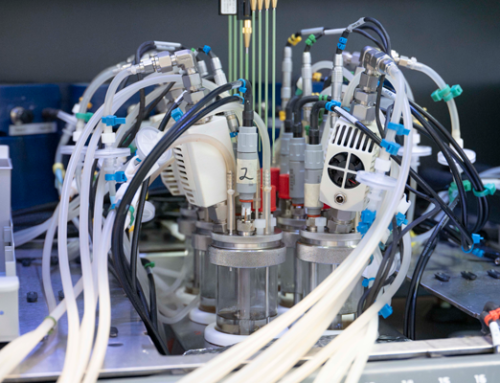

To understand adiabatic calorimetry, it helps to first think about what happens inside a battery during charge and discharge. The simplest battery assembly includes a cathode, an anode, and an electrolyte. More advanced batteries have additional components, such as a solid-electrolyte interphase (SEI) layer or thermal management systems.

Some of these components are thermally sensitive. When a battery is exposed to abuse conditions, such as overcharging, short circuits, or high external temperatures, its components can undergo decomposition reactions, in which chemicals break down into smaller molecules. These reactions are generally exothermic: they release heat as they progress and, in some cases, gases as well. As a result, the internal temperature and pressure of the cell increase. If the process is not controlled, the reactions can cascade into thermal runaway.

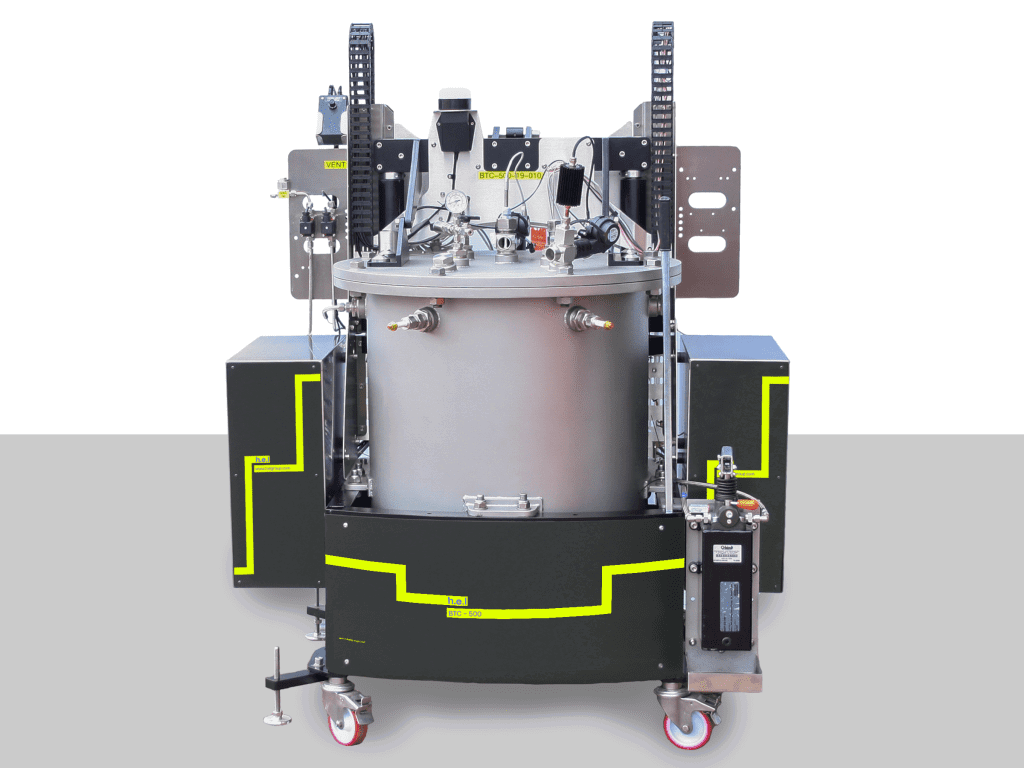

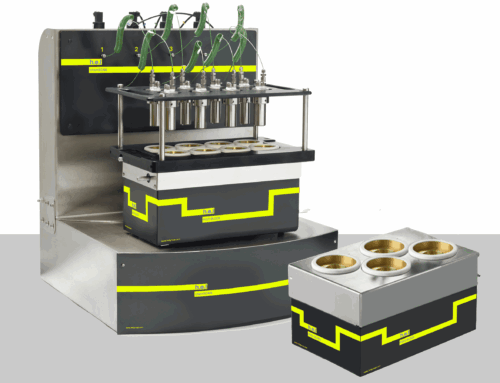

This is where adiabatic calorimetry excels. An adiabatic calorimeter tracks the temperature of the sample and adjusts the temperature of its surroundings to match, so that no heat is lost to the environment. Because the heat cannot dissipate, it accumulates and raises the temperature of the battery. Other methodologies, such as differential scanning calorimetry, thermogravimetric analysis, or environmental chambers, rely on external heating. Adiabatic calorimetry, by contrast, is ideal for capturing the self-heating behavior of batteries. It therefore offers insight into the worst-case scenario rather than ideal conditions, answering the question “if the battery fails, how bad can it get?”
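To make the "no heat loss" condition concrete, here is a minimal sketch of a lumped cell whose heat generation follows an Arrhenius law. Everything in it is an assumption made for illustration: the function name `simulate_cell`, the cell mass, specific heat, pre-exponential factor, and activation energy are hypothetical values, not measured battery data. It compares an ideal adiabatic case (heat loss coefficient of zero) with a case where the cell can shed heat to its surroundings.

```python
import math

R_GAS = 8.314  # universal gas constant, J/(mol K)


def simulate_cell(heat_loss_w_per_k, start_c=100.0, stop_c=250.0,
                  duration_s=40_000, dt_s=1.0):
    """Return a temperature trace (deg C) for a lumped cell.

    heat_loss_w_per_k = 0 models ideal adiabatic conditions.
    All parameter values below are hypothetical, chosen only to illustrate.
    """
    mass_cp = 0.045 * 1000.0   # assumed mass (kg) * specific heat (J/(kg K))
    pre_exp_w = 1.0e15         # assumed Arrhenius pre-exponential factor, W
    ea_j_per_mol = 1.2e5       # assumed activation energy, J/mol

    temp_k = start_c + 273.15
    env_k = temp_k             # surroundings held at the start temperature
    trace = [start_c]
    for _ in range(int(duration_s / dt_s)):
        q_gen = pre_exp_w * math.exp(-ea_j_per_mol / (R_GAS * temp_k))
        q_loss = heat_loss_w_per_k * (temp_k - env_k)
        # Explicit Euler step of the heat balance: m*cp*dT/dt = q_gen - q_loss
        temp_k += (q_gen - q_loss) / mass_cp * dt_s
        trace.append(temp_k - 273.15)
        if temp_k - 273.15 >= stop_c:
            break              # stop once the runaway is clearly under way
    return trace


adiabatic = simulate_cell(heat_loss_w_per_k=0.0)
with_loss = simulate_cell(heat_loss_w_per_k=0.05)
print(f"adiabatic final temperature: {adiabatic[-1]:.1f} C")
print(f"with heat loss, final temperature: {with_loss[-1]:.1f} C")
```

With these assumed parameters, the cell that can lose heat settles close to its starting temperature, while the adiabatic cell keeps the heat it generates and self-heats at an accelerating rate. That difference is why adiabatic data is read as the worst case.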

Thermal runaway occurs when heat generation exceeds the battery's capacity to dissipate heat, leading to an uncontrolled temperature rise. Adiabatic calorimeters can help characterize this process by measuring three important values:

- Onset temperature: the temperature at which self-heating of the battery becomes detectable and begins to accelerate.

- Self-heating rates: how quickly the temperature rises under adiabatic conditions.

- Pressure evolution: how pressure builds as decomposition reactions produce gas, which can cause cell rupture or explosion.

Understanding these values allows engineers to predict and mitigate failure scenarios: knowing when, how fast, and why thermal runaway occurs provides the insight needed to improve designs.
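As a rough illustration of how the first two values can be read off a temperature trace, the sketch below computes self-heating rates by finite differences and reports the first temperature at which the rate reaches a detection threshold. The function name `self_heating_metrics` is hypothetical, the 0.02 °C/min threshold is an assumed default that should be set to match the instrument and test protocol, and the sample data is synthetic.

```python
def self_heating_metrics(times_min, temps_c, onset_rate_c_per_min=0.02):
    """Return (onset_temp_c, max_rate_c_per_min) for a temperature trace.

    onset_temp_c is the first temperature at which the self-heating rate
    reaches the threshold, or None if it never does. The threshold default
    is an assumption, not a value taken from a specific standard.
    """
    if len(times_min) != len(temps_c) or len(temps_c) < 2:
        raise ValueError("need two or more matching time/temperature points")

    # Forward-difference heating rate for each interval, in deg C per minute
    rates = [
        (temps_c[i + 1] - temps_c[i]) / (times_min[i + 1] - times_min[i])
        for i in range(len(temps_c) - 1)
    ]

    onset_temp_c = None
    for i, rate in enumerate(rates):
        if rate >= onset_rate_c_per_min:
            onset_temp_c = temps_c[i]  # temperature at the start of the interval
            break

    return onset_temp_c, max(rates)


# Synthetic example data, not a real measurement
times = [0, 10, 20, 30, 40, 50]                 # minutes
temps = [80.0, 80.1, 80.2, 80.6, 82.0, 90.0]    # deg C
onset, max_rate = self_heating_metrics(times, temps)
print(f"onset temperature: {onset} C, max self-heating rate: {max_rate:.2f} C/min")
```

Real traces are noisy, so in practice the rate would likely need smoothing or a requirement that the threshold be exceeded over several consecutive intervals before an onset is declared.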

Adiabatic battery testing: a growing trend

Adiabatic calorimetry provides unique insights into battery chemistry, such as the behavior of the electrolyte and electrodes under thermal stress, and the progression of decomposition reactions, which result in the production of gas and pressure buildup. The data obtained from adiabatic calorimetry does not just confirm that a battery is safe or unsafe. It provides detailed thermal profiles that can be used to optimize battery systems in several ways:

- Battery management system (BMS) development: Fine-tune safety cutoffs and response times based on onset temperatures and heating rates

- Materials selection: Choose chemistries with higher stability or delayed decomposition thresholds

- SOC-specific design: Understand how different charge levels impact runaway risk, and adapt usage recommendations accordingly

- Safety integration: Design smarter venting systems, thermal insulation, and extinguishing strategies based on expected pressure and gas release
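For the venting and gas-release point in the list above, a first-order estimate of how much gas a cell released can be made from pressure readings with the ideal gas law. This is only a sketch under stated assumptions: the test is taken to run in a sealed vessel with a known free volume, the gas is treated as ideal, and the function names `gas_moles` and `gas_released_mol` as well as the example numbers are hypothetical.

```python
R_GAS = 8.314  # universal gas constant, J/(mol K)


def gas_moles(pressure_pa, free_volume_m3, temp_c):
    """Moles of ideal gas at the given pressure, volume, and temperature."""
    return pressure_pa * free_volume_m3 / (R_GAS * (temp_c + 273.15))


def gas_released_mol(p_start_pa, t_start_c, p_end_pa, t_end_c, free_volume_m3):
    """Estimate the gas generated between two states of a sealed vessel.

    Assumes a constant, known free volume and ideal-gas behavior.
    """
    start = gas_moles(p_start_pa, free_volume_m3, t_start_c)
    end = gas_moles(p_end_pa, free_volume_m3, t_end_c)
    return end - start


# Hypothetical readings: 1 L free volume, pressure rising from 1 bar to 6 bar
released = gas_released_mol(
    p_start_pa=1.0e5, t_start_c=25.0,
    p_end_pa=6.0e5, t_end_c=25.0,
    free_volume_m3=1.0e-3,
)
print(f"estimated gas released: {released:.3f} mol")
```

Comparing the two states at the same temperature, for example before the test and after cooling back down, separates gas that was actually generated from pressure that only reflects thermal expansion.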

In essence, adiabatic calorimetry does not just help prevent dangerous scenarios; it helps engineers design them out of the system entirely.

Battery safety is one of the most critical challenges in modern energy storage, as demand grows for electric vehicles, consumer electronics, and grid-scale storage. Some battery standards make adiabatic calorimetry testing mandatory, such as GB/T 36276-2023 in China, whereas others recommend it, such as SAND2017-6925 in the US. Internationally, more testing standards are incorporating or referencing adiabatic data for compliance and risk assessment.

As regulatory frameworks evolve, this type of in-depth calorimetric analysis is becoming essential for market access and product certification.

Adiabatic calorimetry: a crucial technique for the next generation of batteries

The push for higher-performing, faster-charging, and longer-lasting batteries does not have to come at the cost of increased risk. As the world leans more heavily on energy storage, from vehicles to buildings to grids, battery safety research must evolve in parallel.

Adiabatic calorimetry provides researchers and engineers with the crucial thermal and pressure data required to understand battery failure and its consequences, not in theory, but in the real, dangerous moments that matter. In this context, adiabatic calorimetry is a fundamental tool that allows us to answer critical questions:

- What reactions drive failure?

- How fast do they escalate?

- Can we intervene in time?

As such, the remaining question about adiabatic calorimetry is no longer whether we should use it, but when we are going to start.